1940: Nimatron

The Nimatron was an early relay-based game-playing machine, although its impact on later developments in digital computers and computer games was, at best, negligible. It was devised by the nuclear physicist Dr. Edward Uhler Condon in the winter of 1939/1940 and built with the assistance of Gerald L. Tawney and Willard A. Derr.

Condon had been appointed Associate Director of Research at Westinghouse Electric in 1937. His idea of implementing the game of Nim in circuitry came from recognising that the same scaling circuits used in Geiger counters could represent the numbers defining the game's state (for more information about the game Nim, see the next article). The machine was intended to liven up Westinghouse's exhibit during the second season of the 1939–1940 New York World's Fair. (It was later exhibited at the Buhl Planetarium in Pittsburgh.)

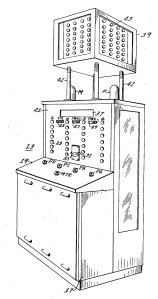

The game was housed in a large cabinet topped by an elevated box; on all four sides of the box, banks of lights displayed the current game state to the audience. Patrons were challenged to beat the machine, using buttons to choose a bank and how many of its lights to extinguish (equivalent to picking up matches in traditional Nim) before handing the turn to the machine. Condon recalled that about 50,000 people may have played the game during the six months it was on display, though few ever beat it.

A notable detail is that the machine had a built-in delay before each of its moves. The reasoning was that human players, who had to think about their moves, would feel embarrassed if the machine replied within a fraction of a second. The machine was therefore made to appear to deliberate for a few seconds, so as not to insult its human opponents. Condon believed this might have been the first deliberate slowing-down of a computer.

Since Nim can be played perfectly with a simple strategy, the machine was also set up to play only a certain number of predetermined games, so that challengers would have some chance of beating it. Players who insisted that the machine could not be beaten were proven wrong by the operators, who had learned these games by heart and could demonstrate a win.
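
In the normal-play form of Nim, where whoever takes the last object wins, the perfect strategy rests on the bitwise XOR of all pile sizes (the "nim-sum"): a position is lost for the player to move exactly when the nim-sum is zero, and the winning reply is any move that brings it back to zero. The Python sketch below illustrates that rule. It assumes the normal-play convention, which the source does not confirm for the Nimatron, and it is not a reconstruction of the machine's relay logic; the function names and example positions are hypothetical.

```python
from functools import reduce
from operator import xor
from typing import List, Optional, Tuple


def nim_sum(piles: List[int]) -> int:
    """Bitwise XOR of all pile sizes, the so-called nim-sum."""
    return reduce(xor, piles, 0)


def winning_move(piles: List[int]) -> Optional[Tuple[int, int]]:
    """Return (pile_index, new_size) for a move that leaves a nim-sum of zero.

    Returns None when the nim-sum is already zero: every legal move then
    leaves a nonzero nim-sum, so the player to move loses against perfect play.
    """
    total = nim_sum(piles)
    if total == 0:
        return None
    for index, size in enumerate(piles):
        target = size ^ total  # size this pile must shrink to
        if target < size:      # a move may only remove objects, never add them
            return index, target
    return None  # not reached: some pile always has the top bit of `total` set


if __name__ == "__main__":
    # Hypothetical positions, not taken from the Nimatron's actual games.
    print(winning_move([3, 4, 5]))     # (0, 1): reduce the first pile from 3 to 1
    print(winning_move([1, 3, 5, 7]))  # None: nim-sum is already zero
```

Since the rule works bit by bit on the binary form of each pile size, it maps naturally onto hardware that stores numbers in binary.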

Despite its success as a public attraction, Condon considered the machine the biggest failure of his career, because he had not recognised its underlying potential. The patent filed for the machine included a description of its internal representation of numbers, a concept that proved universally important in the computer revolution that was just around the corner. Because the Nimatron was only ever built as an amusement device, neither Condon nor the other Westinghouse managers realised what they had on their hands. The machine, now missing and probably long since disassembled, was almost completely forgotten.

References

- Peter Goodeve’s NIMROD page

- Niels Bohr Library & Archives: Oral History Transcript – Dr. Edward U. Condon

- U.S. patent 2,215,544: Machine to Play Game of Nim

- Desert Hat – Early(er) Computer Games: Babbage and Nimatron

- Picture: U.S. patent 2,215,544 (Public domain)